Assume we have the following table:

| create table t1

(

id int not null identity (1,1) primary key

,status tinyint not null default 0

,filler nchar(4000) not null default '#'

)

go

insert t1(status)

select top 10000 1

from sys.fulltext_system_stopwords as a

,sys.fulltext_system_stopwords as b

go

update top(100) t1

set status = 0 |

Table t1 has 10,000 rows and also 10,000 pages at the leaf level of the clustered index (one row per page, since I’ve added the filler column which is 8,000 bytes wide). I’ve decided creating the test table like this (one row per page), since it eases further calculations.

I was using a table similar to this, when collecting data during the day that had to be processed in a nightly batch job. To distinguish between new arrived rows and already processed ones, the status column is used. Let’s just say, we use a status value of 1 to mark rows that have been processed and that a value of 0 is flagging all rows that are ready to be processed. So, at the moment there are 10,000 rows in total, where 100 rows (with status=0) are waiting to be handled.

For all rows that could be successfully handled, the nightly batch will set the value of the status column to 1 at the end. Usually, if all rows could be properly processed, all values of the status column will be at 1 after the nightly batch has accomplished its work.

So let’s say, all rows could be handled successfully and no new rows will be arriving during the nightly batch. What’s the difference than between the following two statements:

Statement 1:

| update t1 set status = 1

where status != 1 |

Statement 2:

Logically, there’s no difference. Both statements will set the value of the status column to 1 for all existing rows. But we have only 100 rows with status != 1 in place, so will there be any noticeable difference in the number of writes and the amount of dirty pages in the buffer cache after executing each of the two UPDATE statements? Is SQL Server smart enough to detect that only 100 rows are affected (that is also 100 pages in our case) for both queries, or is the update without a predicate really touching all rows/pages?

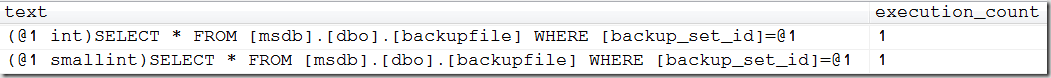

Well, let’ see. For each of the following experiments I have repeated the creation of the test table before executing one of the two UPDATE statements. Additionally, I have performed the following script right before starting the UPDATE:

| checkpoint 10

go

dbcc dropcleanbuffers

dbcc freeproccache |

This is to make sure that all modified pages have been written to disk before examining the number of dirty pages that our UPDATE will create.

For examining the performed number of writes, I queried the column last_logical_writes of the DMV sys.dm_exec_query_stats. The number of dirty pages after each UPDATE was simply obtained by utilizing the DBA’s all purpose tool DBCC. DBCC MEMORYSTATUS reveals a regarding section (almost at the end) containing buffer pool information which looks like this:

We start with the first statement, containing the predicate WHERE status != 1. I expect to see only 100 pages been written (100 logical writes) and almost the same amount of dirty pages (may be some more, since the value obtained by DBCC MEMORYSTATUS is server-wide).

Ok, the numbers are as expected. My experiment shows 100 logical writes and 115 dirty pages. Nor surprise here.

My second experiment uses statement 2 (that one without a predicate). This time, the result is surprising. Again the UPDATE shows 100 logical writes and a similar amount of dirty pages (135). That’s really amazing, isn’t it? SQL Server is smart enough to write only modified data and not touches unchanged rows, even if we, let’s say, forget the predicate. I didn’t expect this result.

Including the OUTPUT clause

What will happen if we incorporate an OUTPUT clause into our UPDATE statements? Via the OUTPUT clause I do have access to all modified data by using the virtual DELETED and INSERTED tables. Actually, two questions arise, when not specifying the predicate WHERE status != 1:

- Will we see all rows in the OUTPUT? Technically, only 100 rows are affected, but logically we have specified that all rows have to be modified. So, how many rows are returned by OUTPUT inserted.*?

- If OUTPUT inserted.* returns all rows, how many writes and dirty pages can be seen this time?

Here come the answers:

- If no predicate is specified, the OUTPUT clause returns all rows, regardless of the fact that, technically, only 100 rows are affected by the UPDATE.

- Incorporating the OUTPUT clause does have no effect on the number or necessary writes and also no impact on the quantum of dirty pages.

I’d say, this is as expected.

Introducing an Update Trigger

For the next experiments, I’ve also created an UPDATE TRIGGER like this:

| create trigger tr_t1

on t1 for update as

begin

-- do nothing

declare @x int

end

go |

The trigger doesn’t do anything particularly useful, but let’s see if its only existence changes something.

It does!

With the trigger in place, the number of writes and also the amount of dirty pages increase dramatically, if no predicate is specified (so all rows are affected by the UPDATE). In this case I’ve observed number of writes as well as the total of dirty pages are both around 40,000! That’s about 400 times higher than without a trigger. So, the next CHECKPOINT has to write out about 40,000 pages this time, instead of only around 100 before. Big change!

I think, the reason for this specific behavior lies in the pure existence of the virtual tables INSERTED and DELETED inside the trigger. The trigger is fired after the update has been performed, and these two virtual tables have to contain all tables’ rows, when no predicate is specified. It seems, SQL Server has to modify rows in order to have them included in INSERTED and DELETED tables.

I could also observe reads from tempdb, when the trigger is present. That’s as expected, since INSERTED and DELETED tables are hosted inside tempdb.

Somewhat strange, since the behavior for the OUTPUT clause is different, although both cases (OUTPUT and TRIGGER) are pretty similar.

So, despite the trigger does nothing, it is affecting query performance and resource utilization! Not because of the trigger’s code, but simply because of its pure existence. Just one more reason to be careful with triggers!

Wrapping up

The following table contains all measured results.

| Trigger | Predicate | Logical Writes | Dirty Pages |

| No Trigger | No Predicate | 100 | 135 |

| WHERE status!=1 | 100 | 115 |

| FOR UPDATE | No Predicate | 40,101 | 40,107 |

| WHERE status!=1 | 501 | 556 |